Abstract

I recently moved our primary nameserver from orff.debian.org, which is an aging blade in Greece, to a VM on one of our ganeti clusters. In the process, I rediscovered a lot about our DNS infrastructure. In this post, I will describe the many sources of information and how they all come together.

Introduction

The Domain Name System is the hierarchical database and query protocol used on the Internet today to map hostnames to IP addresses and IP addresses back to hostnames, to look up the servers responsible for services such as mail, and a gazillion other things. Management and authority in the DNS are split into different zones, which are subtrees of the global tree of domain names.

Debian currently has a bit over a score of zones. The two most prominent are clearly debian.org and debian.net. The rest is made up of debian domains in various other top-level domains and of reverse zones, which are used for IP address to hostname mappings.
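To make the reverse-zone idea concrete, here is a small sketch of how an address maps to the name its PTR record lives under. It relies only on Python's standard `ipaddress` module; the addresses are documentation examples, not Debian hosts.

```python
import ipaddress


def reverse_name(address: str) -> str:
    """Return the fully qualified name where a PTR record for `address` lives."""
    return ipaddress.ip_address(address).reverse_pointer + "."


# IPv4 addresses end up under in-addr.arpa, IPv6 addresses under ip6.arpa.
print(reverse_name("192.0.2.10"))
print(reverse_name("2001:db8::1"))
```

A reverse zone is simply the zone that holds a subtree of these names, for example everything below 2.0.192.in-addr.arpa.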

Types and sources of information

The data we put into DNS comes from a wide range of different systems:

- Classical zonefiles maintained in git. This represents the core of our domain data. It maps services like blends.debian.org to static.debian.org or specifies the servers responsible for accepting mail to @debian.org addresses. It also is where all the ftp.CC.debian.org entries are kept and maintained together with the mirror team.
- Information about debian.org hosts, such as master, is maintained in Debian's userdir LDAP, queryable using LDAP[^ldap].
  - This includes first and foremost the host's IP addresses (v4 and v6).
  - Additionally, we store the server responsible for receiving a host's mail in LDAP (mXRecord LDAP attribute, DNS MX record type).
  - LDAP also has some specs on computers, which we put into each host's HINFO record, mainly because we can and we are old-school.
  - Last but not least, LDAP also has each host's public ssh key, which we extract into SSHFP records for DNS.
- LDAP also has per-user information. Users of debian infrastructure can attach limited DNS elements as dnsZoneEntry attributes to their user[^ldap2].
- The auto-dns system (more on that below).
- Our puppet also is a source of DNS information. Currently it generates only the TLSA records that enable clients to securely authenticate certificates used for mail and HTTPS, similar to how SSHFP works for authenticating ssh host keys.
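As an illustration of the ssh key to SSHFP step, the following is a minimal sketch and not the code we actually run. It assumes the key is available as a standard OpenSSH public key line and emits a SHA-256 (fingerprint type 2) record. The function name and the sample key blob are made up for the example.

```python
import base64
import hashlib

# SSHFP algorithm numbers from the IANA registry (RFC 4255, 6594, 7479).
SSHFP_ALGORITHMS = {
    "ssh-rsa": 1,
    "ssh-dss": 2,
    "ecdsa-sha2-nistp256": 3,
    "ecdsa-sha2-nistp384": 3,
    "ecdsa-sha2-nistp521": 3,
    "ssh-ed25519": 4,
}


def sshfp_record(hostname: str, pubkey_line: str) -> str:
    """Build an SSHFP record from a line like 'ssh-ed25519 AAAA... comment'."""
    key_type, blob_b64 = pubkey_line.split()[:2]
    algorithm = SSHFP_ALGORITHMS[key_type]
    # The fingerprint is a hash over the raw (base64-decoded) key blob.
    digest = hashlib.sha256(base64.b64decode(blob_b64)).hexdigest()
    return f"{hostname} IN SSHFP {algorithm} 2 {digest}"


# Placeholder blob, not a real host key.
fake_blob = base64.b64encode(b"not a real key").decode()
print(sshfp_record("master.debian.org.", f"ssh-ed25519 {fake_blob} root@master"))
```

A client with VerifyHostKeyDNS enabled can then compare the key a server presents against this record.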

Debian's auto-dns and geo setup

We try to provide the best service we can. As such, our goal is that, for instance, user access to www or bugs should always work. These services are, thus, provided by more than one machine on the Internet.

However, HTTP does not require clients to retry a different server if one of those in a set is unavailable. For us this means that when a host goes down, it needs to be removed from the corresponding DNS entry. Ideally, the world shouldn't have to wait for one of us to notice and react before the service works again.

Our solution for this is our auto-dns setup. We maintain a list of hosts that are providing a service. We monitor them closely. Whenever a server goes away or comes back we automatically rebuild the zone that contains the element.

This setup also lets us reboot servers cleanly. Since one of the things we monitor is "is there a shutdown running", we can de-rotate a machine from DNS simply by issuing a shutdown -r 30 kernel-update. Once the host is back, it will automatically get re-added to the round-robin zone entry.
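The rotation decision can be pictured roughly like this. It is only a sketch: the `HostStatus` fields and the `in_rotation` helper are names invented for this example and say nothing about how auto-dns stores its monitoring results.

```python
from dataclasses import dataclass


@dataclass
class HostStatus:
    name: str
    reachable: bool         # hypothetical: did the service check succeed?
    shutdown_pending: bool  # hypothetical: is a shutdown currently scheduled?


def in_rotation(hosts: list[HostStatus]) -> list[str]:
    """Keep only hosts that answer and are not about to go down."""
    return [h.name for h in hosts if h.reachable and not h.shutdown_pending]


hosts = [
    HostStatus("host-a", reachable=True, shutdown_pending=False),
    HostStatus("host-b", reachable=True, shutdown_pending=True),   # rebooting soon
    HostStatus("host-c", reachable=False, shutdown_pending=False)  # down
]
print(in_rotation(hosts))
```

With a 30 minute shutdown delay, a host has time to drop out of the records and for cached answers to expire before it actually goes away.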

The auto-dns system produces two kinds of output:

- In service-mode it generates a file with just the address records for a specific service. This snippet is then included in its zone using a standard bind $INCLUDE directive. Services that work like this include bugs and static (service definition for static).
- In zone-mode, auto-dns produces zonefiles. For each service it produces a set of zonefiles, one for each of a set of different geographic regions. These individual zonefiles are then transferred using rsync to our GEO-IP enabled nameservers. This enables us to give users a list of security mirrors closer to them and thus hopefully faster for them.
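The difference between the two output modes can be sketched as follows. This is an illustration under assumptions: the function names, the TTL, the region names and the addresses are invented. A real zonefile would also need SOA and NS records, which are left out here.

```python
import ipaddress


def address_records(label: str, addresses: list[str], ttl: int = 300) -> list[str]:
    """Render one A or AAAA record per address, depending on the IP version."""
    records = []
    for text in addresses:
        ip = ipaddress.ip_address(text)
        rtype = "A" if ip.version == 4 else "AAAA"
        records.append(f"{label} {ttl} IN {rtype} {ip}")
    return records


def service_mode(label: str, addresses: list[str]) -> str:
    """A snippet with just the address records, meant to be $INCLUDEd."""
    return "\n".join(address_records(label, addresses)) + "\n"


def zone_mode(label: str, by_region: dict[str, list[str]]) -> dict[str, str]:
    """One record set per geographic region, keyed by region name."""
    return {region: service_mode(label, addrs) for region, addrs in by_region.items()}


print(service_mode("bugs", ["192.0.2.1", "2001:db8::1"]))
for region, body in zone_mode("security", {"eu": ["192.0.2.1"], "na": ["198.51.100.1"]}).items():
    print(f"; region {region}\n{body}")
```

In service-mode there is one answer for everybody, while in zone-mode the nameserver picks the region's file based on where the query comes from.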

Tying it all together

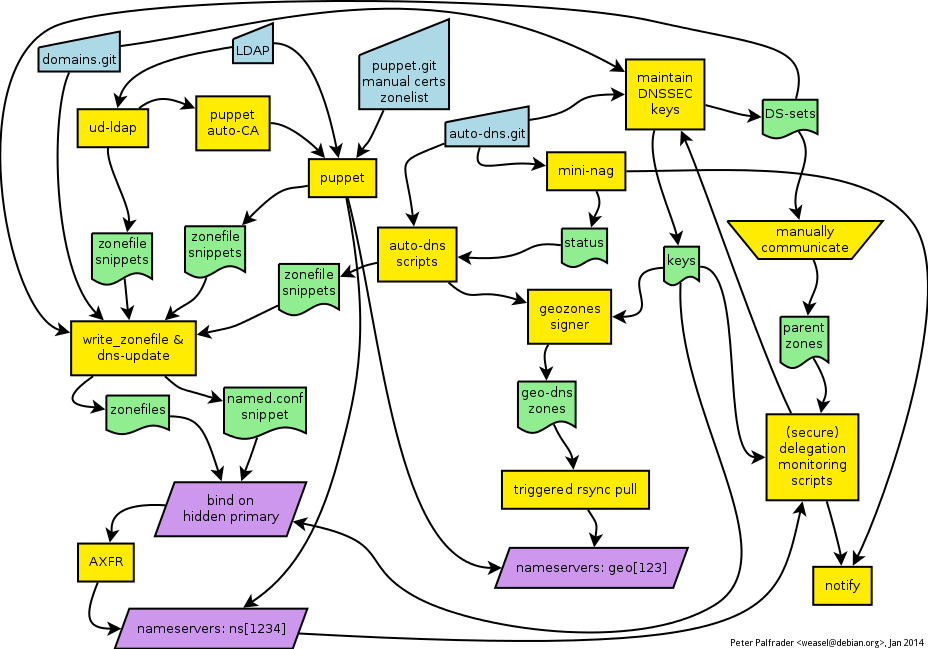

Figure 1: The Debian DNS Rube Goldberg Machine.

Once all the individual pieces of source information have been collected, the dns-update and write_zonefile scripts from our dns-helpers repository take over the job of building complete zonefiles and a bind configuration snippet. Bind then loads the zones and notifies its secondaries.

For geozones, the zonefiles are already produced by auto-dns' build-zones and those are pulled from the geo nameservers via rsync over ssh, after an ssh trigger.

and also DNSSEC

All of our zones are signed using DNSSEC. We have a script in dns-helpers that produces, for all zones, a set of rolling signing keys. For the normal zones, bind 9.9 takes care of signing them in-process before serving the zones to its secondaries. For our geo-zones we sign them in the classical dnssec-signzone way before shipping them.

The secure delegation status (DS set in parent matches DNSKEY in child) is monitored by a set of nagios tests, from both dsa-nagios and dns-helpers. Of these, manage-dnssec-keys has a dual job: not only will it warn us if an expiring key is still in the DS set, it can also prevent the key from expiring by issuing timely updates of the key's metadata.
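What "DS set in parent matches DNSKEY in child" means can be shown with a short sketch. It follows the key tag and digest definitions from RFC 4034 with SHA-256 digests, and is not taken from our nagios checks. The function names and the two-byte "public key" are placeholders.

```python
import hashlib
import struct


def name_to_wire(name: str) -> bytes:
    """Encode a domain name in canonical (lowercase) DNS wire format."""
    wire = b""
    for label in name.lower().rstrip(".").split("."):
        if label:
            wire += bytes([len(label)]) + label.encode("ascii")
    return wire + b"\x00"


def dnskey_rdata(flags: int, protocol: int, algorithm: int, public_key: bytes) -> bytes:
    """DNSKEY RDATA: 16-bit flags, 8-bit protocol, 8-bit algorithm, key bytes."""
    return struct.pack("!HBB", flags, protocol, algorithm) + public_key


def key_tag(rdata: bytes) -> int:
    """Key tag as in RFC 4034 Appendix B (not valid for algorithm 1)."""
    acc = 0
    for i, byte in enumerate(rdata):
        acc += byte if i & 1 else byte << 8
    acc += (acc >> 16) & 0xFFFF
    return acc & 0xFFFF


def ds_sha256(owner: str, rdata: bytes) -> str:
    """DS digest: SHA-256 over the owner name followed by the DNSKEY RDATA."""
    return hashlib.sha256(name_to_wire(owner) + rdata).hexdigest()


def ds_matches(owner: str, rdata: bytes, ds_key_tag: int, ds_digest_hex: str) -> bool:
    """True if a DS record (key tag plus SHA-256 digest) refers to this DNSKEY."""
    return key_tag(rdata) == ds_key_tag and ds_sha256(owner, rdata) == ds_digest_hex.lower()


# Placeholder key material, far too short to be a real key.
rdata = dnskey_rdata(257, 3, 8, b"\x01\x02")
print(key_tag(rdata), ds_sha256("debian.org.", rdata))
```

A monitoring check along these lines would fetch the DS set from the parent and the DNSKEY set from the child, and complain when no DS record matches a key that is currently in use.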

Relevant Git repositories

[^ldap]: ldapsearch -h db.debian.org -x -ZZ -b dc=debian,dc=org -LLL 'host=master'

[^ldap2]: ldapsearch -h db.debian.org -x -ZZ -b dc=debian,dc=org -LLL 'dnsZoneEntry=*' dnsZoneEntry

-- Peter Palfrader